It’s fairly safe to say that quite a lot of test automation efforts fail. It is also very safe to say that without test automation an agile team fails. So how can you make sure that while doing agile your test automation will not fail and thus your agile team will not fail? One of the ways to answer this question is by looking at why test automation often fails within agile environments.

When I talk about test automation in this post, I am referring to testing that is done to reduce the amount of manual regression work: so-called functional test automation, or automated regression testing.

Moving target

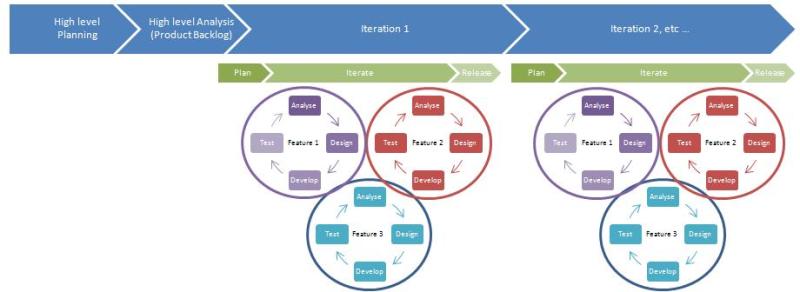

Test automation quite often does not receive the attention it needs and deserves, even in agile teams. Many test automation efforts start too late and without the appropriate preparation, resulting in organic test automation driven by a moving target. The moving target is the system under test which, in agile, is constantly in flux. Each sprint, new features are added and bugs are fixed, and quite often it is not clear at the start of a project where it is going to end up. Writing automated scripts that will stand the test of time against such a flexible environment is difficult. It is even more difficult when the base on which the automation is built is weak.

Quite often test automation lags behind what is being delivered within an iteration. This is somewhat logical, considering that it is difficult to test, let alone automatically test, what has not been built yet. Ideally, while manually testing the new feature(s), you as a tester are already pondering how to automate them so that you do not have to do the tedious work more than once. Given enough time within your iteration, you might actually be able to automate some of the features, but from what I have seen thus far, generally not all features will be covered by test automation within one iteration. So if these tests are not all automated, what happens to them in the next iteration? Are they omitted? Are they picked up and automated retrospectively?

If you do not keep track of what has been automated, both in your current iteration and in previous iterations, how can you rely on your test automation? You can’t be sure what exactly it covers, so a bug can easily slip through the net of your automated tests.
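Keeping track does not require heavy tooling. As a minimal sketch, assuming each story is identified by a name and each sprint by a number (the `AutomationLedger` class and its methods are hypothetical, not taken from any tool), a per-story record makes anything delivered in an earlier sprint but still not automated show up as carry-over instead of silently dropping out:

```python
from dataclasses import dataclass, field


@dataclass
class AutomationLedger:
    """Hypothetical record of which delivered stories have automated regression tests."""

    automated: dict[str, int] = field(default_factory=dict)  # story -> sprint it was automated in
    pending: dict[str, int] = field(default_factory=dict)    # story -> sprint it was delivered in

    def deliver(self, story: str, sprint: int) -> None:
        # A newly delivered story starts out without automated coverage.
        self.pending[story] = sprint

    def automate(self, story: str, sprint: int) -> None:
        # Move the story from pending to automated and remember when that happened.
        self.pending.pop(story, None)
        self.automated[story] = sprint

    def carried_over(self, current_sprint: int) -> list[str]:
        # Stories delivered in an earlier sprint that still have no automated tests.
        return sorted(s for s, sprint in self.pending.items() if sprint < current_sprint)


ledger = AutomationLedger()
ledger.deliver("login", 1)
ledger.deliver("search", 1)
ledger.automate("login", 1)
ledger.deliver("checkout", 2)
print(ledger.carried_over(2))  # ['search']
```

Reviewing the carry-over list at sprint planning makes the retrospective automation work visible rather than implicit.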

This moving target you are testing needs to be traced and tested solidly, repeatedly and in a trustworthy way!

Definition of Done

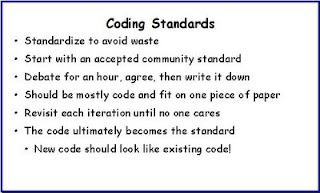

In the majority of the DoDs I have seen, one of the items refers to something like “tests automated”. What I have not seen thus far, however, is a team attaching as much value to its automation code as it does to its production code. Quite a lot of DoDs refer to certain coding standards, yet these standards often seem not to apply to functional test automation. Isn’t your functional automation code also just code? If so, why should it not be covered in code reviews, be written according to useful guidelines and standards, and ideally use a framework to make the code sustainable?

Test automation is just writing code

I have seen several automation efforts within agile teams where test automation was done without proper thought having been put into it. A tool was chosen based on little more than team members having heard of it or having had good experiences with it. No base or framework was chosen to keep the code clean. Since you are writing code, you should follow the same rules as the rest of the software developers. Don’t think that your code, merely because it consists of tests, does not need some form of framework. If you want your tests to survive more than a few iterations, designing your code for reuse is the logical step.

By the way, coding standards do not need to be too complicated. In 2009 “Agile in a flash” came up with a coding standard that could work for all languages and for most environments:

All of the above-mentioned points are “logical” when writing an application that is supposed to go into production. However, when looking at a lot of (agile) projects, these logical “best practices” seem to be totally forgotten when it comes to test automation.

Succeed in test automation

So, how do you succeed in your test automation? How do you make it work? The answer seems clear to me: test automation is not like writing code; it is writing code. Since it is the same, treat it the same way!

Do your code reviews, follow some form of standard, use a (simple) framework to make writing tests easier, and create reusable modules in your automation code. In other words, treat your functional test automation with the same respect as your production-grade code. Who knows, you might want to run your tests against your production environment some day! When setting up your initial test automation environment and framework, don’t be shy: ask the developers in your team for tips, tricks and suggestions. They have quite likely gone through those setup steps more often than you have, so use their knowledge. Asking for their insights and ideas not only helps you, it also makes them feel more responsible for contributing their two cents on the test automation side. They will get a clearer idea of what you intend to achieve, so they might also be more willing to help keep their code testable; they might even enjoy helping you write the test scripts!

Resources

Some informational resources where you can find ideas on how to set up the test automation framework:

Hi, in response to “In the majority of the DoD’s I have seen, one of the items is something referring to “tests automated”. The thing I have thus far not seen however, is the team adding as much value to the automation code as they do to the production code.” I’d like to say that our DoD states ‘stories are accepted if they’re automated’. This has several consequences: it becomes the whole team’s responsibility (developers and testers), there is an unwillingness to work on ‘legacy’ bugs (from previous sprints), and there is a feeling of a race. The required level of automation for the current sprint’s stories is defined as ‘cover everything the story states (a must) and everything else if possible’.

Whether this practice is good or not good enough, there’s no discussion, as it’s a requirement.

The test code is never the same size as the production code, and never should be, because many things are programmed in higher-level languages like PowerShell. Using languages that make it faster to implement the functionality helps us get ahead of the developers’ work, even though there are more developers than testers on the project.

Alexander, the languages are indeed another thorn in my side. Quite often I see test automation being done in the same language the application under test was built in, because “that just makes sense”. I fully agree with you that for automation you should always use a language the testers are comfortable with and which lends itself to easy, rapid development and high levels of reuse.

One way we generally manage to get to that level is by placing an abstraction layer on top of the driver, which makes the scripting a lot simpler and thus faster to build.
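As an illustration, here is a minimal sketch of such a layer in Python. It assumes a hypothetical low-level driver interface (`type_text`, `click`, `read`) rather than any real driver's API, and the `FakeDriver` simulates a login screen in memory so the example runs without a browser. Test scripts call `AppActions.log_in` and never see a locator:

```python
from typing import Protocol


class Driver(Protocol):
    """Hypothetical low-level driver interface; a real one might wrap WebDriver or White."""

    def type_text(self, locator: str, text: str) -> None: ...
    def click(self, locator: str) -> None: ...
    def read(self, locator: str) -> str: ...


class FakeDriver:
    """In-memory stand-in for a login screen so the sketch runs without a browser."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.status = ""

    def type_text(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        # Simulate the application's reaction to the login button.
        if locator == "#login-button":
            ok = self.fields.get("#user") == "alice" and self.fields.get("#password") == "secret"
            self.status = "Welcome alice" if ok else "Login failed"

    def read(self, locator: str) -> str:
        return self.status if locator == "#status" else self.fields.get(locator, "")


class AppActions:
    """Abstraction layer: business-level steps written once on top of the driver."""

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def log_in(self, user: str, password: str) -> str:
        # Locators live here only; when the screen changes, only this method changes.
        self.driver.type_text("#user", user)
        self.driver.type_text("#password", password)
        self.driver.click("#login-button")
        return self.driver.read("#status")


# A test script now reads close to pseudo code.
app = AppActions(FakeDriver())
assert app.log_in("alice", "secret") == "Welcome alice"
assert app.log_in("alice", "wrong") == "Login failed"
```

Swapping `FakeDriver` for a class that wraps a real driver would leave the test scripts unchanged, which is where the speed and reuse come from.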

You really should, within reason, use an existing tech stack that has good adoption and is already maintained within your organization. Obviously, if you work at a company that makes some sort of high-performance embedded device and writes everything in machine code for custom hardware, that’s not really feasible. Otherwise, you should probably bite the bullet and write your automation so it runs under the same OS type and version, using the same language and language version, with as many of the same third-party libraries as is feasible, as the product developers are already using. Yes, it may save time up front to choose a different language for automation, but there are a number of reasons why it’s a bad long-term choice.

The first reason is maintainability and scalability of your test infrastructure. Sure, a separate stack works if you run all your tests off a local machine or a shared machine that the QA department fully owns and maintains. But more than likely, at some point, you are going to be looking at deploying to a VM, both for a shared environment for ad-hoc automation runs and test development, and even more so for integration with a Continuous Integration system. At that point, unless you are in a small organization, you are talking about working with a systems or ops group who maintains deployment images and builds out machines and VMs for you. If you’ve rolled your own QA tech stack, you are now introducing a new image for the ops guys to build out and maintain. And since you are not working on product code, any maintenance of your image (upgrading the OS version or your VM/interpreter version, or getting a new tool included in your deployment image) becomes a low-priority task.

Second, using a stack that is already known within your organization means you start off with the benefit of the accumulated institutional knowledge of all the developers working with that stack. If you are using your own configuration and tools, when you run into problems with your automation suite, your first resource becomes Google, not a human.

Third, developer buy-in. If you are using the same stack as the product team you are working with, they will get up to speed with your automation suite much more quickly. This means a more productive two-way conversation between dev and test, an increased likelihood that dev will be willing and able to step in and help out with test development near the end of a sprint when you really need them to, and higher productivity and code quality in the tests they write when they do jump in.

Lastly, there’s perception, which is unfortunately really important if you want to develop effective processes. If you come into the discussion saying that test development needs to be considered on the same level as product development, but then turn around and say that you need to use a different tech stack because Java/C/WhatHaveYou is too hard to write, you are putting yourself in the position of being perceived as technically inferior before you have even written your first line of code.

Of course, using a product development stack is not feasible for every QA org, both for technical and skill deficit reasons. But if possible, you really should try to stick with a tech stack that already has adoption and support within your company.

> Stories are accepted if they’re automated

Hmm, sounds familiar 🙂

> The test code is never of the same size as the production code, and never should be

Never say never. Imagine a test kit for a heart pacemaker or a nuclear reactor 🙂

When I talk to clients about how to integrate test automation into their development process, I like to explain (to developers) that code is not the same as a feature. Yes, you write code to implement a feature, but your customer does not equate the two.

Your customer only acknowledges that a feature exists when they’re using it to do things they want to accomplish. A prerequisite for that is that the feature actually helps them accomplish their goal. A prerequisite for that is that the feature is well-designed. Then the design needs to be tested. The fact that there happens to be code involved, in the creation of the feature, and possibly in the testing of the feature, is completely unimportant to the user.

The upshot is that a coding task is only finished once the customer uses the feature implemented by the code, and the feature does the job it was meant to do. If you start from any other perspective, you’re not going to build software that works.

But if you start from that perspective, testing automatically becomes part of the development activity, and test code automatically becomes part of a coding task.

Funny, I fully agree that code is not the same as a feature, yet I never pondered it out loud. Makes total sense indeed! I’m going to see if that helps convince a somewhat stubborn customer that automation can work for them, and that it will if they do it right.

Nice post Martijn. I agree that, in order to write efficient test automation, actual coding should be involved. Recording tools are, especially in agile environments, of little use.

Recording tools can be very useful; however, I usually use them to get some inspiration on how to approach an object. Once the code is written, we throw the recorded material away again, since it is usually not quite what you would want to keep reusing.

Also keep in mind that, depending on your goal, record/playback can be exactly what you need: it can quickly automate something simple, repeatable and boring (throwaway code) that you need to get through every time you are trying to reach the object of your test automation. Think, for example, of a quickly recorded login function that you use while writing your automated routines, or simply when, during a sprint or test phase, you need to get past that login screen before you can do anything.

In other words, pick your goal and aim your automation at that.

Just getting my dev team to understand this now. Amazing how simple it can be once they realize that the automated tests are just another task in writing the feature code. We’ve just started using Cucumber and the QA folks are able to use their native experience writing test “cases”. The devs then use webrat to implement the test. Having both these teams and the product owner sitting in a planning meeting makes the development much more transparent.
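The split described here, with testers writing scenarios and developers implementing the steps, rests on matching each plain-language step against a pattern. Below is a minimal Python sketch of that matching idea only. It is not Cucumber's or webrat's API (the commenter's team implements steps in Ruby with webrat), and the `step` decorator and `run_scenario` function are hypothetical:

```python
import re
from typing import Callable

# Registry of (compiled pattern, step function) pairs; a tiny stand-in for Cucumber's matching.
STEPS: list[tuple[re.Pattern, Callable[..., None]]] = []


def step(pattern: str) -> Callable[[Callable[..., None]], Callable[..., None]]:
    """Decorator registering a step definition for lines that fully match `pattern`."""
    def register(func: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def run_scenario(text: str, context: dict) -> None:
    for line in text.strip().splitlines():
        # Drop the Gherkin keyword (Given/When/Then/And) and match the rest of the line.
        body = line.strip().split(" ", 1)[1]
        for regex, func in STEPS:
            match = regex.fullmatch(body)
            if match:
                func(context, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {body!r}")


# Developer-owned step definitions; a real suite would drive the application here.
@step(r"a cart containing (\d+) items?")
def given_cart(ctx: dict, count: str) -> None:
    ctx["cart"] = int(count)


@step(r"the user adds (\d+) items?")
def when_add(ctx: dict, count: str) -> None:
    ctx["cart"] += int(count)


@step(r"the cart shows (\d+) items?")
def then_cart(ctx: dict, count: str) -> None:
    assert ctx["cart"] == int(count), f"cart has {ctx['cart']} items"


# Tester-owned scenario text, written in the plain language of a test case.
scenario = """
Given a cart containing 2 items
When the user adds 1 item
Then the cart shows 3 items
"""
context: dict = {}
run_scenario(scenario, context)
print(context)  # {'cart': 3}
```

A step with no matching definition fails loudly, which is what keeps the scenario text and the implementation in sync.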

Agile is the perfect ground for rapid changes in what you are testing. I have found that testing under these conditions can be wasteful because you may be retesting things that did not change, as well as the new stuff. Of course understanding what changed is also problematic.

The answer: Dynamic Change Analysis (DCA) is a method by which change can be annotated by comparing the binaries. This method will tell you what is New, what is Gone, and what has changed. You can then map that to what tests need to be run, and save a lot of time because you are only testing what has changed.

Now this part is easy to understand, but in practice you really have no way to do this, because you are missing a valuable piece of the equation: what code was touched during my tests? Having this information allows you to connect the dots. If you know what code was touched during a test case run, and you know what code has changed in the new build, then you can map code to test cases and preselect what to run next on the new build of the software.
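Once both pieces of information exist, the mapping itself is simple set arithmetic. A minimal sketch, assuming per-test coverage from the previous build and the new, changed and removed code units of the new build are already available as sets of names (how those sets are produced, e.g. by comparing binaries, is out of scope; all names are hypothetical and this is not any vendor's implementation):

```python
from dataclasses import dataclass


@dataclass
class Selection:
    to_run: list[str]     # tests whose earlier results are invalidated by the change
    uncovered: list[str]  # new or changed code units that no recorded test touches


def select_tests(coverage: dict[str, set[str]],
                 changed: set[str],
                 removed: set[str],
                 added: set[str]) -> Selection:
    """Map changed code to the tests that touched it in the previous run."""
    invalidated = changed | removed
    to_run = sorted(test for test, touched in coverage.items() if touched & invalidated)
    # New code has no coverage history yet, so report it instead of guessing.
    covered = set().union(*coverage.values())
    uncovered = sorted((added | changed) - covered)
    return Selection(to_run, uncovered)


# Coverage recorded per test case on the previous build (code units as "module.function").
coverage = {
    "test_login": {"auth.check_password", "ui.render_login"},
    "test_search": {"search.query", "ui.render_results"},
    "test_checkout": {"cart.total", "auth.check_password"},
}
result = select_tests(coverage,
                      changed={"auth.check_password"},
                      removed=set(),
                      added={"search.fuzzy_match"})
print(result.to_run)     # ['test_checkout', 'test_login']
print(result.uncovered)  # ['search.fuzzy_match']
```

Reporting uncovered new code separately matters: a selection based purely on old coverage would otherwise run nothing at all for brand-new functionality.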

I know of a company that has such a solution and it is incredible how much time this can save your already bulging test timelines. Wind River is that company, and I would recommend checking out their Test Management solution to save you a bundle of time. This product is also a great Test Framework with low level automation capabilities, and a host of other great features.

Don, this sounds like a very interesting approach and I will definitely check that out!

Well, what code was touched during a test run is pretty easy to detect, to be honest. Sure, it depends on the exact language used in the project.

But instrumenting code for getting an idea which branch was executed is fairly trivial to achieve. GCC, for example, includes gcov, which will print out annotated source code files with how often each line of code was reached. Achieving what you want, then, is merely a question of running each test case separately, so that coverage results of two test cases don’t mix in the gcov output. There are similar tools for other languages freely available.
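For Python code, the standard library offers a comparable hook: the function passed to `sys.settrace` is called whenever a new function frame is entered. A minimal sketch (function-level rather than gcov's line-level counts; the code under test and the test names are made up) that runs each test case separately and records which functions it touched:

```python
import sys
from types import FrameType
from typing import Any, Callable, Optional


def trace_test(test: Callable[[], None]) -> set[str]:
    """Run a single test and return the functions ("module.name") it executed."""
    touched: set[str] = set()

    def tracer(frame: FrameType, event: str, arg: Any) -> Optional[Callable]:
        if event == "call":
            touched.add(f"{frame.f_globals.get('__name__')}.{frame.f_code.co_name}")
        return None  # function-level only; no per-line tracing inside each frame

    previous = sys.gettrace()  # keep any tracer already installed (debugger, coverage tool)
    sys.settrace(tracer)
    try:
        test()
    finally:
        sys.settrace(previous)
    return touched


# Hypothetical code under test and two test cases.
def check_password(password: str) -> bool:
    return password == "secret"


def render_results(items: list[str]) -> str:
    return ", ".join(items)


def test_login() -> None:
    assert check_password("secret")


def test_results() -> None:
    assert render_results(["a", "b"]) == "a, b"


# Running each test separately keeps their coverage from mixing, as with gcov.
coverage = {t.__name__: trace_test(t) for t in (test_login, test_results)}
for name, funcs in coverage.items():
    print(name, sorted(funcs))
```

For line-level data, the tracer could return itself and record `"line"` events; in practice a maintained tool such as coverage.py is the more robust choice.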

Similarly, any version control system would tell you what code was changed between two test runs, if you generate testable builds in a sane manner.

What I’ve done in the past is set up buildbot to run unit tests test case by test case with gcov output as an HTML file included in the buildbot logs. Very easy to drill down from a failed build to the test case & responsible code changes from there. It’s very kludgy compared to an integrated solution, granted, so Wind River presumably improves on that greatly!

My team started out with this philosophy, at least at the unit and API levels, and used our automated tests to drive coding. Worked great. At the GUI level, it took us longer to realize that we had to put the same care into our GUI test design, and treat our GUI test scripts as production code as well. It’s made a huge difference. Please keep spreading this message!

I have been promoting this idea for quite a while. The great thing about treating your test code the same as production code is that it makes no difference how development is done: you can always promote it, since it will always give great results. Treating test code as something other than code has always been a sore point for me; it feels close to saying that testing is not as important as developing, which simply makes me angry.

Thank you for the post. We have been agile for some time now, and using a recording tool within the sprint has proven to be very time-consuming. The maintenance costs of such tests are astronomical, especially when the GUI keeps changing out from under me. I really appreciate your insight into this, as I am starting to look at testing for a new project and I want to get automation started on the right foot. This is going to be part of the recipe for success I will push. With a technical background, I can code, but I haven’t done so in a corporate environment, so the challenge of following processes is going to be very interesting.

I did have a somewhat related question for anyone here: what reading would you recommend on automating the right amount? I am the only Test Developer on a team of 10 developers, and I need to be very careful to get the greatest efficiency out of my time. I guess I am looking for the sweet spot of automation versus effort.

Olivier, let me start by saying I have never liked R&P (record and playback) tools: the cost of maintenance is huge and the effectiveness quite often low.

As for finding the holy grail of “the right amount”, I have yet to find it 🙂 One thing that helps a lot is using a fairly lightweight driver to drive the tests and talk to your test subject, something like White, WebDriver or simply a JDBC implementation for that matter. On top of that we put an abstraction layer, which makes for easy maintenance and especially for easy, fast and reusable scripting. Done right, this results in a highly flexible setup where you can easily and quickly write fairly simple (close to pseudo code) scripts to automate your functional testing. That way you have something that makes it easy to keep functional coverage high and the implementation and maintenance efforts relatively low.
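To make the “close to pseudo code” idea concrete, here is a minimal keyword-driven sketch: scripts are plain text lines, and a small runner dispatches each line to a method of the abstraction layer. The keyword names and the `|` line format are assumptions for illustration, and the `log` list stands in for real driver calls:

```python
class Keywords:
    """Hypothetical abstraction layer exposing actions as script keywords."""

    def __init__(self) -> None:
        # Stands in for calls into a real driver (White, WebDriver, JDBC, ...).
        self.log: list[str] = []

    def open_page(self, name: str) -> None:
        self.log.append(f"open {name}")

    def enter(self, field: str, value: str) -> None:
        self.log.append(f"type {value!r} into {field}")

    def press(self, button: str) -> None:
        self.log.append(f"click {button}")


def run_script(script: str, keywords: Keywords) -> None:
    """Execute lines of the form 'keyword | arg | arg ...' against the keyword layer."""
    for line in script.strip().splitlines():
        name, *args = [part.strip() for part in line.split("|")]
        method = name.replace(" ", "_")
        action = getattr(keywords, method, None)
        # Reject private names and non-callable attributes so scripts only reach keywords.
        if method.startswith("_") or not callable(action):
            raise ValueError(f"Unknown keyword: {name!r}")
        action(*args)


script = """
open page | login
enter | username | alice
enter | password | secret
press | sign in
"""
layer = Keywords()
run_script(script, layer)
print("\n".join(layer.log))
```

Writing a new test then means writing new script lines; only a genuinely new kind of action requires code in the layer.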

Of course you can also build all your scripts directly against your driver; however, in my experience this leads to somewhat more cumbersome, higher-maintenance scripts, which require more actual coding than the more scripted way of working with an abstraction layer in between.

Hope I gave you some useful ideas, if you want or need more information, please feel free to give me a shout!

Definitely some food for thought. I will use this as a guideline when choosing a direction and talking with my team. Thank you!

Hello Martijn,

I fully agree with you, test automation code should be treated exactly as regular production code. In fact, I’d like to stress that there should always be collective ownership of the test code as well as the production code. If, for example, a database / design change causes several automated tests to break, then those tests should be fixed with top priority, just like a regular broken build. Likewise, tests must be reviewed and refactored like regular code.

A team that embraces those practices and keeps them in mind when giving time estimates should not see any backlog of missing (not yet automated) tests. In fact, when a defect is found, the first thing to do should always be to write an automated test that exposes the defect.
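A minimal sketch of that practice with Python's `unittest`, built around a made-up defect (a coupon entered twice was applied twice); the function and the coupon code are hypothetical. The test reproduces the report, fails until the fix is in, and then stays in the suite as a guard:

```python
import unittest


def apply_coupon(total: float, codes: list[str]) -> float:
    """Hypothetical function under test; the fix deduplicates coupon codes."""
    discounts = {"SAVE10": 0.10}
    for code in set(codes):  # before the fix this looped over `codes`, applying duplicates twice
        total *= 1 - discounts.get(code, 0.0)
    return round(total, 2)


class CouponRegressionTest(unittest.TestCase):
    def test_duplicate_coupon_applied_once(self) -> None:
        # Reproduces the reported defect; it failed before the fix.
        self.assertEqual(apply_coupon(100.0, ["SAVE10", "SAVE10"]), 90.0)

    def test_single_coupon_still_applies(self) -> None:
        self.assertEqual(apply_coupon(100.0, ["SAVE10"]), 90.0)


if __name__ == "__main__":
    unittest.main()
```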

I have seen your last statement go horribly wrong as well as work ideally. Automating every bug can cause very high overhead for your automation suite, lots of tests just there for one particular bug. So that strategy should be used moderately and wisely, otherwise you can end up having to maintain a lot of tests with little or no added value to your suite.

Nice article.

In the beginning, agile didn’t appear to fit all software testing tasks, such as automation. It was a long road to include test automation as part of the product delivery process, beginning with sprints that allowed the build-up of a test framework supporting the longevity of automated test runs. The test framework was the cornerstone of successful (automated) testing within sprints. The evolution of this effort simplified the actual test effort by using exploratory testing as a method of becoming acquainted with new sprint features and finding tricky bugs. The result of this effort was clearly defined test cases entered into a test management system, with often small, contained upgrades to the framework to support new features if necessary. With web-based testing, the tools allowed very generic test frameworks, so often upgrades were not necessary at all. So the process became: explore, define test cases, debug them, and then they simply became part of the automated test run. It is possible to have automated tests with longevity. I have had automated tests last as long as 8 years without re-engineering, simply by upgrading them to add support for new features, refactoring them to make the code more efficient, or even removing retired features.

Hi, I found your blog on LinkedIn Test Automation group. You might be interested in reading about our agile-driven quality control process at Eucalyptus, the leading open-source Cloud Infrastructure:

http://wp.me/p21Vr7-35

Thank you.

Reblogged this on Objects of Distraction and commented:

Some really useful observations made here, logical but often overlooked!

The key thing in your post is treating your automation code just like your application code. Automation is a software development project unto itself, period. You are creating “testware” to drive the testing of your software. Also, especially in agile, automation needs to be thought about from the first second of the project and in each iteration/sprint. Yes, it is tough to automate a moving target. But the framework and early components (descriptors of a page & its objects; a basic implementation of the Page Object Model in Selenium, which in other tools is called a Component) can be built and beefed up later as things become more stable. Sure, the automation may be a sprint behind, but as I just said, you do some of the preliminary work in the first sprint.
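A minimal sketch of the “descriptors of a page & its objects” idea in Python, assuming a hypothetical driver with `open` and `click` methods (a stand-in, not Selenium's API). The descriptor can start with the few elements that exist in the first sprint and be extended later without touching the tests that use it:

```python
from dataclasses import dataclass, field


@dataclass
class PageDescriptor:
    """Describes a page and its objects; locators are plain strings (hypothetical)."""
    name: str
    url: str
    elements: dict[str, str] = field(default_factory=dict)  # logical name -> locator


class RecordingDriver:
    """Stand-in for a real browser driver; it only records what it was asked to do."""

    def __init__(self) -> None:
        self.calls: list[tuple[str, str]] = []

    def open(self, url: str) -> None:
        self.calls.append(("open", url))

    def click(self, locator: str) -> None:
        self.calls.append(("click", locator))


class PageObject:
    """Generic page object: tests use logical names, the descriptor supplies locators."""

    def __init__(self, descriptor: PageDescriptor, driver: RecordingDriver) -> None:
        self.descriptor = descriptor
        self.driver = driver

    def open(self) -> "PageObject":
        self.driver.open(self.descriptor.url)
        return self

    def click(self, element: str) -> "PageObject":
        self.driver.click(self.descriptor.elements[element])
        return self


# Sprint 1: only what exists so far is described.
login = PageDescriptor("login", "/login", {"submit": "#submit"})
# Sprint 2: the page grows, so the descriptor is beefed up; existing tests are untouched.
login.elements["forgot_password"] = "a.forgot-password"

driver = RecordingDriver()
PageObject(login, driver).open().click("submit").click("forgot_password")
print(driver.calls)
```

Because locators live in data rather than in test code, a changed screen usually means editing one descriptor entry.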

Another thing to think about regarding “automation in agile” is the type of automation you’re going to be doing. Meaning: will the team implement unit test automation at the code level, API/services automation at that level of the technology stack, or automation at the GUI level itself? Each of these has different techniques and tools to accomplish the work. It takes a bit of foresight and planning to get it going right. And all in all, the whole team will need to understand what is going on and buy into it.

The number one problem with automation, agile or not, is misconceptions and false expectations of the work. Including who should be doing it, and when.

I’ve been dealing with this for over 20 years. This is nothing new, but a constant headache that we need to detect early on and deal with before it really causes us pain.

Regards,

Jim Hazen